
What Is Web Crawling?

The scraper gathers this information and organizes it into an easy-to-read document for your own use. The Requests library is a good fit for the first part of the web scraping process (retrieving the web page data).

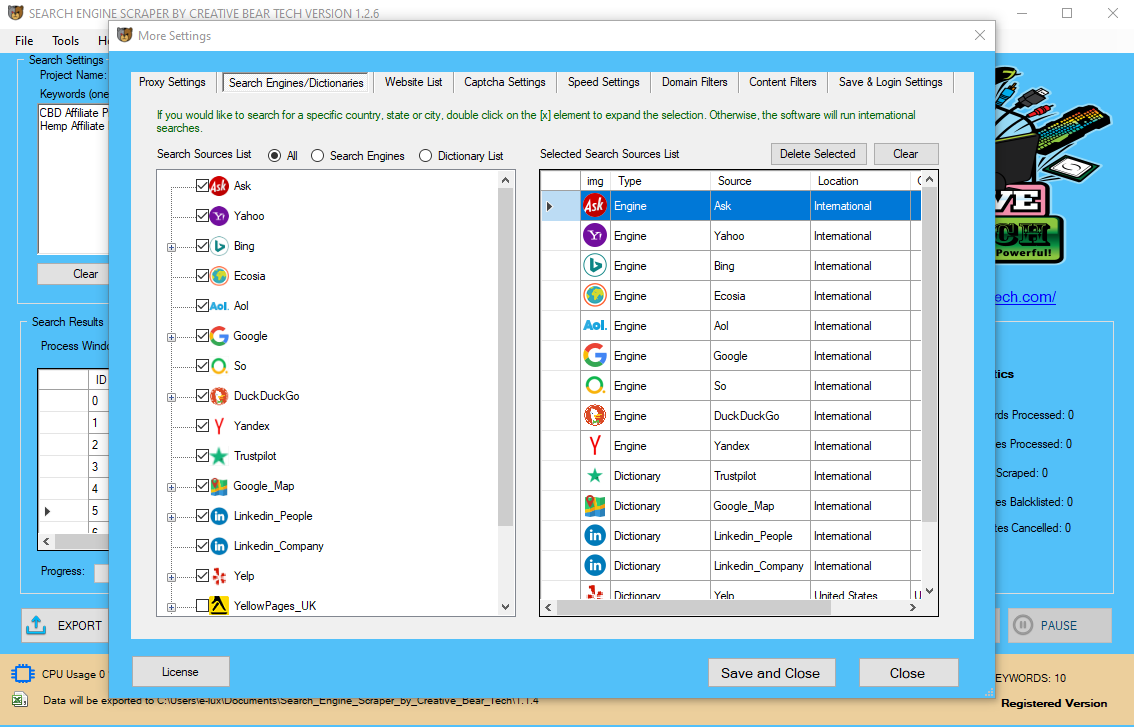

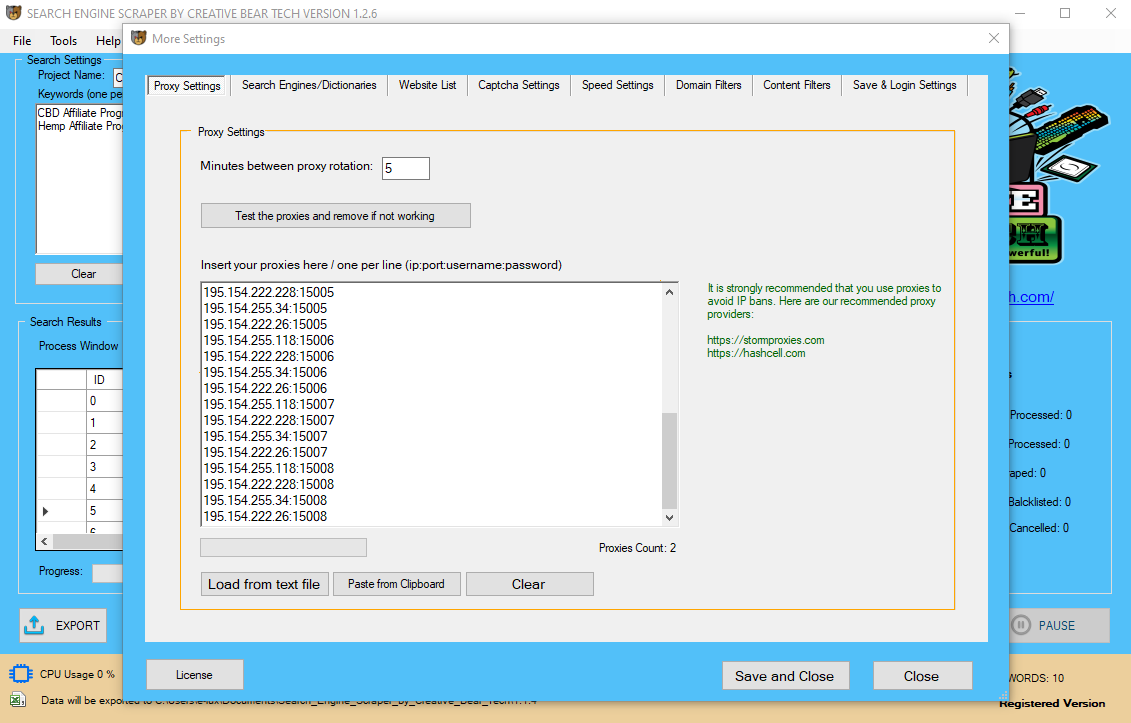

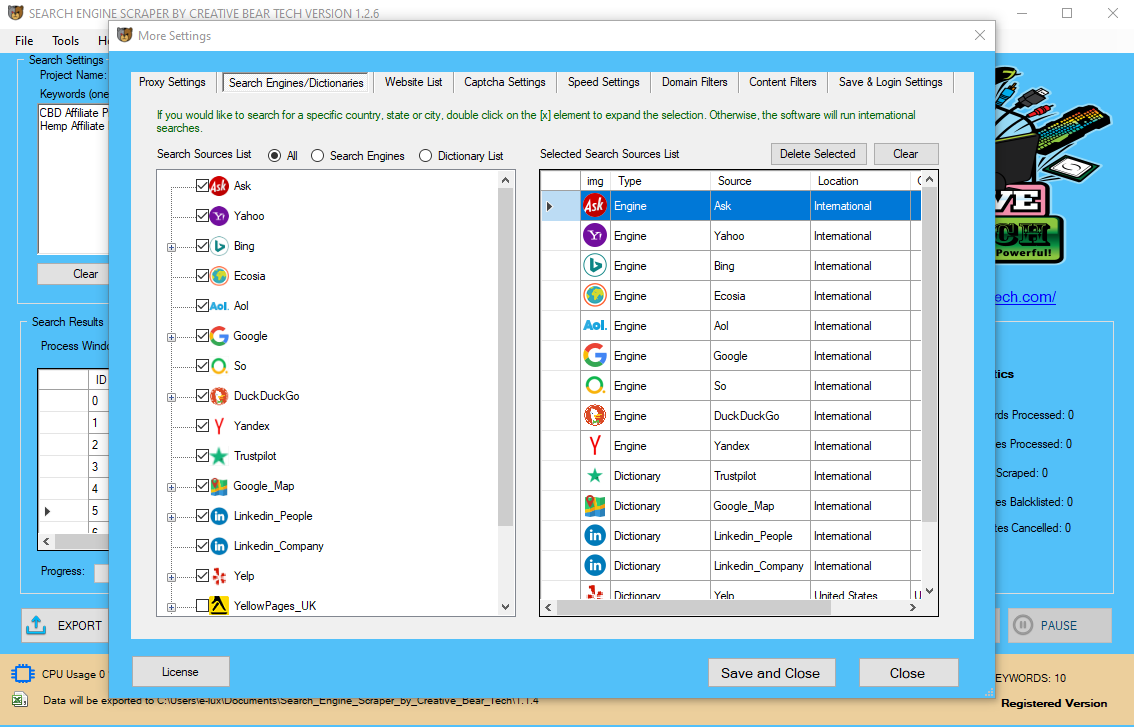

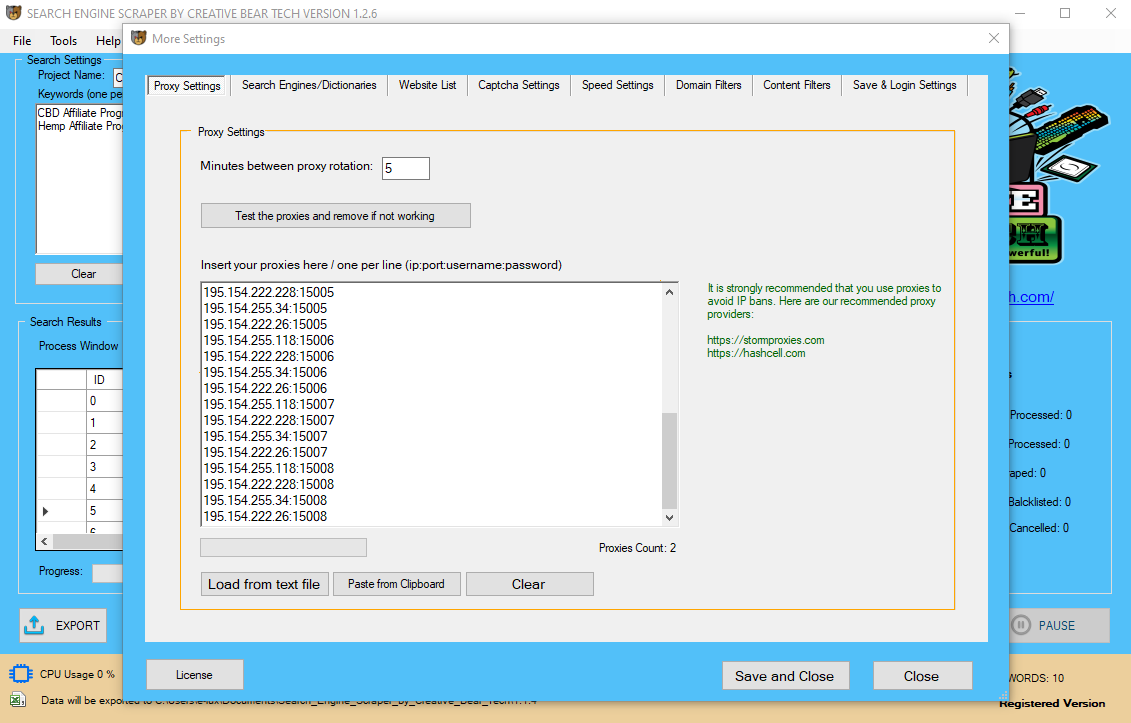

Resources needed to run web scraper bots are substantial, so much so that legitimate scraping bot operators invest heavily in servers to process the vast amount of data being extracted. Scrapy is a Python framework that was originally designed for web scraping but is increasingly used to extract data through APIs or as a general-purpose web crawler. It also has a shell mode where you can experiment with its capabilities.

Google Sheets does this through a function named IMPORTXML. However, this method is only useful when the user specifies the data or patterns required from a website. Alternatively, annotations organized into a semantic layer can be stored and managed separately from the pages, so scrapers can retrieve the data schema and instructions from this layer before scraping the pages.

If your sole intent and purpose is to extract data from a specific website, then a data scraper is the right online tool for you. When faced with a choice between web scraping and web crawling, think about the type of information you need to extract from the web.

As an individual, you can use this real-time data to figure out which products are the cheapest. As a company, you can use it to approach your marketing and sales plans with more confidence. After all, you can’t solve a problem if you don’t know what the problem is, right?

The crawler downloads the unstructured data (HTML content) and passes it to the next module, the extractor. Web scraping, also called web data mining or web harvesting, is the process of building an agent that can automatically extract, parse, download and organize useful information from the web. In other words, instead of manually saving data from websites, web scraping software automatically loads and extracts data from multiple websites according to our requirements. Strange as it sounds, Google Sheets can also perform web scraping.
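
Here is a minimal sketch of that crawler-to-extractor hand-off in Python, using only the standard library. The names `crawl_pages`, `extract_titles` and `TitleExtractor` are hypothetical. The download step is passed in as a plain `fetch` function so a real HTTP client can be plugged in later, and the extractor only pulls each page’s `<title>` as a stand-in for whatever fields your project actually needs.

```python
from html.parser import HTMLParser
from typing import Callable, Dict, Iterable


class TitleExtractor(HTMLParser):
    """Extractor helper: collects the text inside the page's <title> element."""

    def __init__(self) -> None:
        super().__init__()
        self._in_title = False
        self.title = ""

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def crawl_pages(urls: Iterable[str], fetch: Callable[[str], str]) -> Dict[str, str]:
    """Crawler module: download the raw, unstructured HTML for each URL."""
    return {url: fetch(url) for url in urls}


def extract_titles(pages: Dict[str, str]) -> Dict[str, str]:
    """Extractor module: turn each page's raw HTML into one structured field."""
    titles = {}
    for url, html in pages.items():
        parser = TitleExtractor()
        parser.feed(html)
        parser.close()
        titles[url] = parser.title.strip()
    return titles


if __name__ == "__main__":
    # Fake "website" so the example runs offline; a real fetch would make HTTP requests.
    fake_site = {"https://example.com/": "<html><head><title>Example</title></head></html>"}
    print(extract_titles(crawl_pages(fake_site, fake_site.__getitem__)))
```

Keeping the two modules separate means you can change what gets extracted without touching how pages are downloaded.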

This process happens quickly, so you can check the results whenever you want. Web pages are built with something called HTML, or HyperText Markup Language. Web developers use this markup language to create, change and edit the structure and content of web pages. HTML contains all the useful information about a website’s content, which is why web scrapers are built to extract data from HTML. As the scraper interacts with a page’s HTML elements, it “parses” through the information on the page to give you the precise data you’re looking for.
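
To show what “parsing” means in practice, the sketch below walks a page’s HTML with Python’s built-in `html.parser` and collects the text of every element carrying a given CSS class. The class names (`price`, `name`) and the sample markup are made up for illustration. The parser assumes reasonably well-formed HTML, meaning every non-void tag is closed.

```python
from html.parser import HTMLParser
from typing import List

# Elements that never have a closing tag in HTML, so they must not affect nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class ClassTextParser(HTMLParser):
    """Collects the text inside every element whose class list contains target_class."""

    def __init__(self, target_class: str) -> None:
        super().__init__()
        self.target_class = target_class
        self.depth = 0                 # > 0 while we are inside a matching element
        self.current: List[str] = []   # text fragments of the element being read
        self.results: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self.depth:
            self.depth += 1            # a child tag nested inside the match
        elif self.target_class in (dict(attrs).get("class") or "").split():
            self.depth = 1
            self.current = []

    def handle_startendtag(self, tag, attrs):
        pass                           # self-closing tags like <br/> carry no text

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self.depth:
            return
        self.depth -= 1
        if self.depth == 0:            # closed the matching element itself
            self.results.append("".join(self.current).strip())

    def handle_data(self, data):
        if self.depth:
            self.current.append(data)


def extract_by_class(html: str, target_class: str) -> List[str]:
    parser = ClassTextParser(target_class)
    parser.feed(html)
    parser.close()
    return parser.results
```

For example, `extract_by_class('<span class="price">$10</span>', "price")` returns `['$10']`.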

This means you can not only scrape data from external websites but also transform that data and use external APIs (like Clearbit, Google Sheets…). Scrapy is a free and open-source web-crawling framework written in Python.

To reiterate a few points, web scraping extracts established, ‘structured data.’ You knew we’d circle back to that all-important point. And don’t forget, web scraping can be an isolated event, while web crawling combines the two. Going even deeper into the subject, scraping vs crawling is the difference between collection and computation.

The first step is to identify scrapers, which can be done through Google Webmasters or Feedburner. Once you’ve identified them, you can use several methods to stop the scraping by changing the configuration file. In earlier chapters, we learned how to extract data from web pages, or web scrape, with various Python modules. In this chapter, let us look at various techniques to process the data that has been scraped.

Web crawling (or data crawling) is used for data extraction and refers to collecting data from either the World Wide Web or, in data crawling cases, any document, file, etc. Traditionally, it is done at large scale, but it can also handle small workloads. The two may sound the same, but there are some key differences between scraping and crawling.

When you do research by recording the number of posts and followers on your competitors’ social media accounts, that’s scraping. This process of deciding what information you want from a website and recording it is exactly what web scraping bots do. The main difference is that they do it much more quickly and efficiently, which saves you a lot of time and money. One of the most interesting features is that they provide built-in data flows.

A scraper is either custom-built for a specific website or one that can be configured to work with any website. With the click of a button, you can save the data available on the website to a file on your computer. Some websites use methods to prevent web scraping, such as detecting bots and disallowing them from crawling (viewing) their pages.
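
Detecting and blocking bots is the site’s side of the story. On the scraper’s side, a common courtesy (not mentioned above, so treat it as an addition) is to honor the site’s `robots.txt` rules before crawling. Python’s standard `urllib.robotparser` module can check them. This sketch parses a `robots.txt` body you have already downloaded; the function name `is_allowed`, the user-agent strings and the URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Check a URL against the text of an already-downloaded robots.txt file."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # also marks the rules as loaded
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    rules = "User-agent: *\nDisallow: /private/\n"
    print(is_allowed(rules, "example-scraper/0.1", "https://example.com/private/report"))
```

To download the file directly instead, `RobotFileParser` also offers `set_url(...)` followed by `read()`.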

It’s important to know the main differences between web crawling and web scraping, but also to remember that, in most cases, crawling goes hand in hand with scraping. When web crawling, you download the information available online. Crawling is used for data extraction from search engines and e-commerce websites; afterward, you filter out unnecessary information and pick only the data you need by scraping it.

However, estimating the amount of data that will be extracted (variable 2) can be a bit trickier. A general rule of thumb is that the more data being extracted from a page, the more complex the web scraping project. Whether you need to gather small or large amounts of data, web scraping lets you do it quickly and conveniently. In many cases, it is used to make gathering and extracting information from the web much simpler and more efficient.

You can also research your competitors’ social media pages to find these answers. By analyzing their followers and target audiences, you can build a broader list of people you should be following and engaging with on social media. But, as I mentioned before, the possibilities are endless when it comes to web scraping. Humans scrape data from the web all the time, often without even realizing it. When you copy and paste certain information from another website (citing it appropriately, of course), that’s scraping.

That’s why you need data to back up all your decisions, whether you’re at home or in the office. A web scraping interface makes this whole process much easier.

Web scrapers vary widely in design and complexity, depending on the project. In the process, they remove countless hours of manual data entry for sales and marketing teams, researchers, and business intelligence teams.

In this post, I’ll walk through some use cases for web scraping, highlight the most popular open source packages, and walk through an example project that scrapes publicly available data on GitHub. Many software tools are available for building customized web scraping solutions. Some web scraping software can also be used to extract data from an API directly.

Keywords lead you to other keywords, which lead you to more keywords. Keywords break topics down further and further until they are as specific as possible.

The best way to extract this data is to send periodic HTTP requests to the server, which in turn sends the web page to the program. Web scraping is the process of extracting data available on the web using a series of automated requests generated by a program: instead of saving data from websites by hand, the scraper loads and extracts it from as many sites as your requirements call for.
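
Sending periodic requests can be as simple as a loop with a pause between rounds. The sketch below is an illustration under stated assumptions, not a production scheduler. `poll_for_changes` is a hypothetical name, `fetch` is any function that returns a page body as text, and only responses that differ from the previous one are kept, so you see changes rather than every copy. The `sleep` parameter exists so the loop can be tested without actually waiting.

```python
import time
from typing import Callable, List, Optional


def poll_for_changes(fetch: Callable[[str], str], url: str, interval_s: float,
                     rounds: int, sleep: Callable[[float], None] = time.sleep) -> List[str]:
    """Request `url` `rounds` times, pausing `interval_s` seconds between requests,
    and return only the bodies that differ from the previous response."""
    changes: List[str] = []
    previous: Optional[str] = None
    for i in range(rounds):
        body = fetch(url)
        if body != previous:        # first response, or the page changed
            changes.append(body)
            previous = body
        if i < rounds - 1:          # no need to wait after the final request
            sleep(interval_s)
    return changes
```

A 60-second interval, for instance, would be `poll_for_changes(fetch, url, 60, rounds)`.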

Web Scraping Software That Works Everywhere

In the past, extracting data from a website meant manually copying the text available on a web page. These days, there are some nifty Python packages that can help us automate the process!

Web scraping, web harvesting, or web data extraction is data scraping used to extract data from websites. Web scraping software may access the World Wide Web directly using the Hypertext Transfer Protocol, or through a web browser. While web scraping can be done manually by a software user, the term typically refers to automated processes implemented using a bot or web crawler. It is a form of copying in which specific data is gathered and copied from the web, typically into a central local database or spreadsheet, for later retrieval or analysis. After following steps 1 and 2 of the requirement gathering process, you should know exactly how many websites you’d like data extracted from (variable 1).

Python Web Scraping Tutorial: Step By Step Guide For Beginners

For instance, if you wanted to scrape the web for product research, you could instruct our API to scrape relevant pages every 60 seconds throughout the day. Since we handle the technical side of this process, you don’t have to worry about keeping up with the requests.

Requests is a Python library designed to simplify making HTTP requests. This is very useful for web scraping, because the first step in any web scraping workflow is to send an HTTP request to the website’s server to retrieve the data displayed on the target web page. You can even sue potential scrapers if you have forbidden scraping in your terms of service. For instance, LinkedIn sued a group of unnamed scrapers last year, arguing that extracting user data through automated requests amounts to hacking. During web scraping, an attacker is looking to extract data from your website, which can range from live scores, weather data and prices to entire articles.
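
A first HTTP request might look like the sketch below. With Requests installed, the core of it would roughly be `requests.get(url, headers=..., timeout=10).text`; to keep the example free of third-party packages, it uses the standard library’s `urllib.request` instead. The function name `fetch_html` and the `USER_AGENT` value are placeholders (replace the latter with your own contact details), and the timeout is a design choice so one slow server can’t stall the whole scrape.

```python
import urllib.request

# Placeholder identifier; replace with your own project name and contact URL.
USER_AGENT = "example-scraper/0.1 (+https://example.com/contact)"


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Send an HTTP GET request and return the response body decoded as text."""
    request = urllib.request.Request(url, headers={"User-Agent": USER_AGENT})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        # Use the charset the server declares, falling back to UTF-8.
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")
```

Calling `fetch_html("https://example.com/")` returns that page’s HTML as a string (network access required).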

HTML parsing is a web scraping technique that involves taking a page’s HTML code and extracting the relevant information. To figure out who or what needs the help of a scraper, we can return to the grand scale of what data crawling means. When search engines like Bing and Google use the technology for their own purposes, you can see why web crawling as an individual isn’t very practical. For example, Google has so much data housed in its databases that it even offers online resources for keywords.

- Streamlining the research process and minimizing the arduous task of gathering information is a huge benefit of using a web scraper.

- If your sole intent and purpose is to extract data from a specific website, then a data scraper is the right online tool for you.

- All that extracted data is presented to you with minimal effort on your part.

- Data scraping can be scaled to fit your particular needs, meaning you can scrape more websites should your company require more information on a certain topic.

- A scraper gives you the ability to pull the content from a web page and see it organized in an easy-to-read document.

- When faced with a choice between web scraping and web crawling, think about the type of data you need to extract from the internet.

Scrapy is used to crawl and scrape websites and to extract data through APIs. It can serve a wide range of purposes, from data mining to automated testing. Web scraping, also known as screen scraping, web data extraction, or web harvesting, is an automated bot operation aimed at acquiring, extracting, and stealing large amounts of content and data from websites. Programmers deploy sophisticated bots designed to scrape information from websites and from the public profiles of real users on sites and social networks. The last point to note is that crawling is concerned with minimizing the amount of duplicated data.

Therefore, web crawling is a main component of web scraping: it fetches pages for later processing. The content of a page may then be parsed, searched, reformatted, copied into a spreadsheet, and so on. Web scrapers typically take something out of a page to use it for another purpose somewhere else. An example would be finding and copying names and phone numbers, or companies and their URLs, into a list (contact scraping). To acquire the page, web scrapers use crawlers, or, as many people call them, “spiders”.
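
A small version of contact scraping, pulling companies and their URLs into a list, could look like the sketch below. It assumes the page lists each company as a link whose anchor text is the company name. `LinkListParser`, `scrape_links` and the directory URLs in the example are hypothetical. Relative links are resolved against the page’s own URL with `urljoin`, so every entry in the list is a full address.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple
from urllib.parse import urljoin


class LinkListParser(HTMLParser):
    """Collects (anchor text, absolute URL) pairs from every <a href=...> on a page."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self._href: Optional[str] = None   # URL of the link currently being read
        self._text: List[str] = []
        self.links: List[Tuple[str, str]] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:                       # skip anchors without a link target
                self._href = urljoin(self.base_url, href)
                self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            name = " ".join("".join(self._text).split())  # collapse stray whitespace
            self.links.append((name, self._href))
            self._href = None


def scrape_links(html: str, base_url: str) -> List[Tuple[str, str]]:
    parser = LinkListParser(base_url)
    parser.feed(html)
    parser.close()
    return parser.links
```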

Web scraping is the automated process of extracting data from websites, carried out with software known as web scrapers. .htaccess is a configuration file for the Apache web server, and it can be tweaked to prevent scrapers from accessing your data.

We’re going to use the BeautifulSoup library to build a simple web scraper for GitHub. I chose BeautifulSoup because it’s a simple library for extracting data from HTML and XML files, with a gentle learning curve and relatively little effort required. It provides handy helper functions for traversing the DOM tree of an HTML file. Web scraping is a technique used to extract large amounts of data from websites and format it for use in a wide range of applications.

Some web scraping tools only let you manually submit the pages you want to scrape. This is still very useful, since you can submit many pages at a time and get accurate data much more quickly than you would by sifting through the data without a bot. An API, or Application Programming Interface, is the tool that makes this possible.

Spiders are the same kind of program Google uses to crawl new websites and index them in its search results. The only difference is that web scraping crawlers use their crawling techniques for other purposes. After crawling and downloading the page, hackers proceed to extract the website’s data. Unlike screen scraping, which only copies the pixels displayed onscreen, web scraping extracts the underlying HTML code and, with it, data stored in a database. The scraper can then replicate the entire website’s content elsewhere.

Web scraping a page involves fetching it and extracting data from it. Fetching is downloading the page (which a browser does when a user views it).

Scrapy is a fast, open-source web crawling framework written in Python, used to extract data from web pages with the help of XPath-based selectors. Scrapy was first released on June 26, 2008 under the BSD license, with the milestone 1.0 release following in June 2015. It provides all the tools we need to extract, process and structure data from websites. Web crawling is mainly used to index the information on a page using bots, aka crawlers.
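
To give a feel for XPath-based selection, here is a sketch using the standard library’s `xml.etree.ElementTree`, which supports only a limited subset of XPath and requires well-formed markup. It is a stand-in, not Scrapy’s API: inside a Scrapy spider the same selection would roughly be `response.xpath("//span[@class='price']/text()").getall()`. The function name `select_texts`, the product markup and the class names are invented for the example.

```python
import xml.etree.ElementTree as ET
from typing import List


def select_texts(markup: str, path: str) -> List[str]:
    """Return the stripped text of every element matching an ElementTree path.

    ElementTree understands only a limited XPath subset (for example
    './/span[@class="price"]'); full XPath needs a library such as lxml.
    """
    root = ET.fromstring(markup)  # raises ParseError on markup that isn't well-formed
    return ["".join(el.itertext()).strip() for el in root.findall(path)]
```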

While a scraper isn’t necessarily concerned with duplicate data, a crawler sets out to eliminate the problem of delivering the same data more than once. This high-level aspect of web crawling is one of the reasons why the process is performed at larger scales. After all, the more information a crawler has to look through, the higher the chance of duplicate data. Keep these few concepts about web scraping vs web crawling in the back of your mind before diving into your next research project.
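
One way a crawler can avoid delivering the same data twice is to fingerprint each page’s content and skip fingerprints it has already seen. The sketch below hashes the content with SHA-256 after collapsing whitespace; that normalization step and the names `fingerprint` and `unique_pages` are assumptions for illustration. Real crawlers often use fuzzier near-duplicate detection, since two pages can differ only by a timestamp and still carry the same data.

```python
import hashlib
from typing import Iterable, List, Set, Tuple


def fingerprint(html: str) -> str:
    """SHA-256 of the page after collapsing whitespace, so copies that differ
    only in spacing or line breaks get the same fingerprint."""
    normalized = " ".join(html.split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def unique_pages(pages: Iterable[Tuple[str, str]]) -> List[str]:
    """Given (url, html) pairs, return the URLs whose content is new, in order."""
    seen: Set[str] = set()
    unique: List[str] = []
    for url, html in pages:
        key = fingerprint(html)
        if key not in seen:
            seen.add(key)
            unique.append(url)
    return unique
```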

Web scraping is the process of using bots to extract content and data from a website. Data Scraper slots straight into your Chrome browser extensions, letting you choose from a range of ready-made data scraping “recipes” to extract data from whichever web page is loaded in your browser. A web scraper is a dedicated tool designed to extract data from multiple websites quickly and effectively.

Web scraping allows us to automatically extract data and present it in a usable form, or process and store it elsewhere. The collected data can also be part of a pipeline where it is treated as input for other programs.
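
Handing scraped data to other programs usually means writing it in a common format. This sketch serializes a list of scraped records to CSV with Python’s `csv` module; the function name `records_to_csv`, the field names and the sample records are hypothetical. It writes to an in-memory buffer so the result can be passed along directly. To write a file instead, open it with `open(path, "w", newline="")` and pass that to `csv.DictWriter`.

```python
import csv
import io
from typing import Dict, List


def records_to_csv(records: List[Dict[str, str]], fieldnames: List[str]) -> str:
    """Serialize scraped records to CSV text that other programs can read."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(records)   # fields containing commas or quotes are quoted automatically
    return buffer.getvalue()
```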

Data(base) Enrichment On Demand

Collection is useful when all one requires is data, but computation digs further into the data available. Data collected by a scraper is a complete highlight reel, while data from a crawler is more of a mathematical index. In basic terms, web scraping happens when a bot extracts data from web pages. The bot looks for the most useful data and ranks it for you. Think of a web scraper as a musician learning only their favorite classical compositions.

Scraping and crawling go hand in hand throughout data gathering, so often, when one is done, the other follows. Using a web scraper to collect data from social media platforms and individual accounts is also very valuable. Gathering data about your customers can be extremely valuable, since it gives you a more accurate picture of your target market. These are some questions that can be answered when you scrape social media.

Scrapy was originally designed for web scraping, but it can also be used to extract data through APIs or as a general-purpose web crawler. Developing in-house web scrapers is painful because websites are constantly changing.

Each note is information relevant to the topic or topics you’re sifting through. Web scraping, for the most part, is used to find structured data. ‘Structured data’ can include anything from stock information to company phone numbers. Keep that phrase in the back of your mind when mulling over the differences between web scraping and web crawling.

A web scraper can be defined as software or a script that downloads the contents of multiple web pages and extracts data from them. A very important component of a web scraper, the web crawler module, navigates the target website by making HTTP or HTTPS requests to its URLs.
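
The crawler module’s job can be sketched as a breadth-first walk: fetch a page, collect its links, and queue the ones on the same site that haven’t been seen yet. In this minimal version, `crawl_site`, `HrefParser`, the `max_pages` cap and the same-host restriction are assumptions, and `fetch` is any function that returns a page’s HTML (for instance a dictionary lookup over fake pages when testing offline). Fragments such as `#top` are stripped so the same page isn’t queued twice.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable, List, Set
from urllib.parse import urldefrag, urljoin, urlparse


class HrefParser(HTMLParser):
    """Collects the raw href value of every <a> tag on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def crawl_site(start_url: str, fetch: Callable[[str], str], max_pages: int = 50) -> List[str]:
    """Breadth-first crawl restricted to start_url's host; returns URLs in visit order."""
    host = urlparse(start_url).netloc
    queue = deque([start_url])
    seen: Set[str] = {start_url}      # URLs already queued, to avoid revisiting
    visited: List[str] = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        visited.append(url)
        parser = HrefParser()
        parser.feed(fetch(url))
        parser.close()
        for href in parser.hrefs:
            # Resolve relative links and drop any "#fragment" part.
            absolute, _fragment = urldefrag(urljoin(url, href))
            if urlparse(absolute).netloc == host and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return visited
```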

Whew, that’s quite a journey we just went on, perhaps a journey much like the one a web crawler goes on every time the bot finds new URLs to look through. When discussing web scraping vs web crawling, it’s important to remember how large companies use crawlers.

As shown in the video above, WebHarvy is a point-and-click (visual) web scraper that lets you scrape data from websites with ease. Unlike most other web scraping software, WebHarvy can be configured to extract the required data from websites with mouse clicks. You just need to select the data to be extracted by pointing the mouse. We recommend that you try the evaluation version of WebHarvy or watch the video demo. Web scraping software automatically loads and extracts data from multiple pages of websites based on your requirements.

Components Of A Web Scraper

A scraper gives you the ability to pull the content from a page and see it organized in an easy-to-read document. Data scraping can be scaled to fit your particular needs, meaning you can scrape more websites should your company require more data on a certain topic. All that extracted data is presented to you with minimal effort on your part. Streamlining the research process and minimizing the arduous task of gathering information is a big advantage of using a web scraper.

If you need to find information for a company or business, this data can help you understand your product market and competitors and build a more focused and effective marketing plan. Not only can you see what other products are selling for, but you can also see what people are saying about those products. With the right web scraping tools, you can even collect this data in real time, meaning you don’t need to run several scrapes throughout the day hoping to catch a change or update on a product.

Web Scraping Use Cases

On the other hand, web scraping is an automated way of extracting data from the web using bots, aka scrapers. This chapter will give you an in-depth look at web scraping, how it compares with web crawling, and why you might choose web scraping. You will also learn about the components and workings of a web scraper. Scrapy is a fast, high-level web crawling and scraping framework based on Python.
