

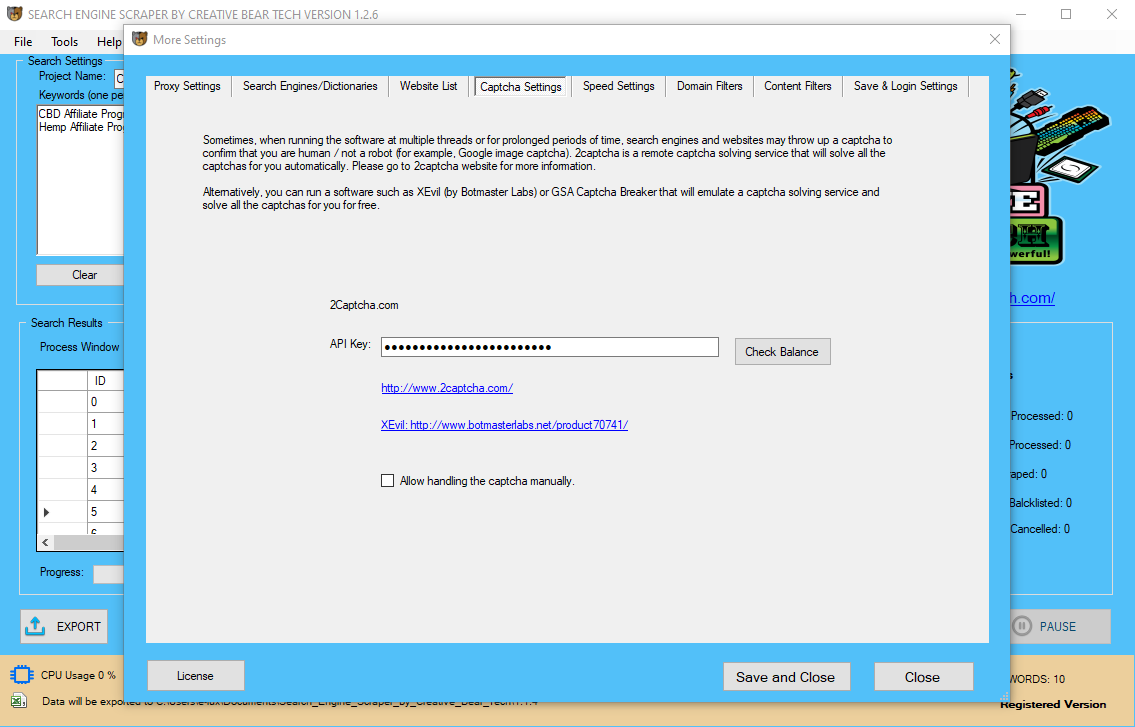

If you ever need to extract results data from Google search, there's a free tool from Google itself that is perfect for the job. It's called Google Docs, and since it fetches Google search pages from within Google's own network, the scraping requests are much less likely to get blocked. The Locations API allows you to search for SerpWow-supported Google search locations. If you perform too many requests over a short period, Google will start to throw captchas at you. This is annoying and can limit how much or how quickly you scrape.

There are powerful command-line tools, curl and wget for instance, that you can use to download Google search result pages. The HTML pages can then be parsed using Python's Beautiful Soup library or PHP's Simple HTML DOM parser, but these methods are fairly technical and involve coding. The other concern is that Google is very likely to quickly block your IP address if you send it a few automated scraping requests in quick succession. This PHP package lets you scrape and parse Google Search results using SerpWow. Ever since the Google Web Search API was deprecated in 2011, I've been looking for an alternative.
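
If you go the curl/wget route, the parsing step can look roughly like the sketch below. It swaps Beautiful Soup for Python's standard-library `html.parser` so it has no dependencies; `LinkExtractor` and `extract_links` are hypothetical names, and the code simply collects every `href` on a saved page.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collects the href of every <a> tag in an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        # attrs arrives as a list of (name, value) pairs
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def extract_links(html):
    """Return all link targets found in an HTML string, in document order."""
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    return parser.links
```

For a page saved with `curl -o results.html "<search URL>"`, you would read the file and pass its contents to `extract_links`.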

In this post, I'll start by covering how to scrape Google search results. A better option is to scrape Google search results from a website that specializes in the content Google crawls. These are often free to use and almost never even try to charge you to run. This might be a good option for a specific domain, or for one that is free to use. While working on a project recently, I needed to grab some Google search results for specific search phrases and then scrape the content from the result pages.

When it comes to scraping search engines, Google is by far the most valuable source of information to scrape. Google crawls the web continuously with the aim of providing users with fresh content.

Google will block you if it deems that you're making automated requests. Google will do this regardless of the scraping method if your IP address is deemed to have made too many requests.

How To Overcome Difficulties Of Low Level (HTTP) Scraping?

This library lets you consume Google search results with just one line of code. For example, you can import google search, run a search for "Sony 16-35mm f2.8 GM lens" and print out the URLs for the search.

Building A SERP Log Script Using Python

Enter the search query in the yellow cell and it will instantly fetch the Google search results for your keywords. This tutorial explains how you can easily scrape Google Search results and save the listings in a Google Spreadsheet.

The AJAX Google Search Web API returns results in JSON. To be able to scrape these results, we need to understand the format in which Google returns them. The obvious way to obtain Google Search results is via Google's Search Page. However, such HTTP requests return lots of unnecessary information (a whole HTML web page). For power users, there are even more advanced options.

There are a number of reasons why you might want to scrape Google's search results. Ever since the Google Web Search API was deprecated in 2011, I've been searching for an alternative. I needed a way to get links from Google search into my Python script. So I made my own, and here is a quick guide to scraping Google searches with requests and Beautiful Soup.

A sales rep sourcing leads from Data.com and Sales Navigator? Or an Amazon retail seller struggling to understand your reviews and Amazon competition? How about a small business owner who wants to be free from manually tracking potential competition on Yelp, Yellow Pages, eBay or Manta? My fully automated Google web scraper performs Google searches and saves its results in a CSV file. For every keyword, the CSV file contains a range of data such as Ranking, Title, Search Term, Keyword Occurrences, Domain name, related keywords and more.
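
A minimal sketch of how one row of such a CSV might be assembled. The helper name `build_row`, the `page_text` argument (whatever text you count keyword hits in, for example a result's title plus snippet) and the exact column keys are assumptions, not the author's actual scraper.

```python
import re
from urllib.parse import urlparse


def build_row(rank, title, url, search_term, page_text):
    """Build one CSV row with Ranking/Title/Search Term/Keyword Occurrences/Domain."""
    domain = urlparse(url).netloc.lower()
    if domain.startswith("www."):
        domain = domain[4:]
    # Count non-overlapping, case-insensitive occurrences of the search term
    occurrences = len(re.findall(re.escape(search_term), page_text, flags=re.IGNORECASE))
    return {
        "Ranking": rank,
        "Title": title,
        "Search Term": search_term,
        "Keyword Occurrences": occurrences,
        "Domain": domain,
    }
```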

It's pretty much like SEO, except for the actual end result. Obviously Google dislikes web scrapers, even though Google itself runs one of the largest web crawlers, alongside Yandex. Google detects scrapers with highly sophisticated artificial intelligence.


In that case, if you keep relying on an outdated method of scraping SERP data, you'll be lost in the trenches. Whatever your end goal is, the SERP Log script can spawn hundreds of instances to generate many SERP listings for you. This heap of SERP logs becomes a treasure trove of data for collecting search results and discovering the latest and most popular websites for a given topic. It has two API endpoints, each supporting its own variant of input parameters for returning the same search data.

It can power websites and applications with an option that is easy to use and install. The Zenserp SERP API allows you to scrape search engine results pages in a simple and efficient manner. The API takes what is usually a cumbersome manual process and turns it into almost automatic work. Note that Google search results can be debatably biased. Sometimes it may be better to scrape results from DuckDuckGo if you want a fairer approach where user behavior doesn't affect the search results.

If you provide me with a list of competitor domains, these will automatically be highlighted in the file for ease of analysis. Zenserp's SERP API is a powerful tool when you need real-time search engine data.

This is the easiest way I know to copy links from Google, and it works the same way with other search engines. Most of the things that work right now will soon become a thing of the past.

If you're already a Python user, you're likely to have both these libraries installed. Google allows users to pass a number of parameters when accessing its search service. This lets us customize the results we receive back from the search engine. In this tutorial, we're going to write a script that lets us pass a search term, number of results and a language filter. You don't need to code in Python or use complex regex rules to scrape the data of each page.
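
A sketch of the URL-building step, assuming the commonly used `q` (query), `num` (results per page) and `hl` (interface language) parameters; `build_search_url` is a hypothetical helper, and Google may ignore or change these parameters at any time. If you need to restrict the language of the results themselves rather than the interface, Google's `lr` parameter (for example `lang_en`) is the usual choice.

```python
from urllib.parse import urlencode


def build_search_url(search_term, number_results=10, language_code="en"):
    """Build a Google search URL from a search term, result count and language."""
    params = {
        "q": search_term,        # the query itself; urlencode escapes spaces as '+'
        "num": number_results,   # results per page (may not be honored)
        "hl": language_code,     # interface language
    }
    return "https://www.google.com/search?" + urlencode(params)
```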

All the organic search results on the Google search results page are contained within 'div' tags with the class 'g'. This makes it very easy for us to select all of the organic results on a particular search page. Once we get a response back from the server, we call raise_for_status() on it so that an HTTP error status raises an exception. Finally, our function returns the search term passed in and the HTML of the results page. ScrapeBox has a custom search engine scraper which can be trained to harvest URLs from almost any website that has a search feature.
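
The 'div with class g' selection can be sketched without third-party libraries as below. This assumes the markup the paragraph describes (organic results in `<div class="g">`, titles in `<h3>`, the first link in the block as the result URL); Google changes its HTML regularly, so treat the class name as a snapshot rather than a guarantee. `OrganicResultParser` is a hypothetical name; with Beautiful Soup the equivalent selection would be `soup.find_all("div", class_="g")`.

```python
from html.parser import HTMLParser


class OrganicResultParser(HTMLParser):
    """Collect {'title', 'url'} dicts from <div class="g"> result blocks."""

    def __init__(self):
        super().__init__()
        self.results = []
        self._div_depth = 0   # nesting depth of <div>s inside the current result
        self._in_h3 = False
        self._current = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "div":
            if self._div_depth:
                self._div_depth += 1
            elif "g" in (attrs.get("class") or "").split():
                # Entering a new organic result block
                self._div_depth = 1
                self._current = {"title": "", "url": None}
        elif self._div_depth:
            if tag == "a" and self._current["url"] is None and attrs.get("href"):
                self._current["url"] = attrs["href"]
            elif tag == "h3":
                self._in_h3 = True

    def handle_endtag(self, tag):
        if tag == "h3":
            self._in_h3 = False
        elif tag == "div" and self._div_depth:
            self._div_depth -= 1
            if self._div_depth == 0:
                # Closed the outer result div: store the finished result
                self.results.append(self._current)
                self._current = None

    def handle_data(self, data):
        if self._in_h3 and self._current is not None:
            self._current["title"] += data


def parse_organic_results(html):
    parser = OrganicResultParser()
    parser.feed(html)
    parser.close()
    return parser.results
```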

Web Scraping

Scraper is a data converter, extractor and crawler combined in one, which can harvest emails or any other text from web pages. It supports UTF-8, so this Scraper handles Chinese, Japanese, Russian and other languages with ease. You do not need coding, XML or JSON skills. This tool will provide accurate organic search results for any device and country and is a fast and cheap alternative to other SEO tools such as ScraperAPI or MOZ. At additional cost, the results can be customized to include extra functionality such as backlink tracking, Google Maps searching or paid-ad content where available.

If something can't be found in Google, it may well mean it's not worth finding. Naturally there are tons of tools out there for scraping Google Search results, which I don't intend to compete with. Google's dominance among search engines is so vast that people often wonder how to scrape data from Google search results. While scraping isn't allowed under its terms of use, Google does provide an alternative and legitimate way of capturing search results. If you hear yourself asking, "Is there a Google Search API?", the answer is yes.
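
One official route is Google's Custom Search JSON API (we assume that is the legitimate alternative meant here). The sketch builds a request URL and pulls `title`, `link` and `snippet` out of the decoded response's `items` list; you need your own API key and search engine ID (`cx`), and the helper names are hypothetical.

```python
from urllib.parse import urlencode

CSE_ENDPOINT = "https://www.googleapis.com/customsearch/v1"


def build_cse_url(api_key, engine_id, query, start=1):
    """URL for a Custom Search JSON API request; start is the 1-based result index."""
    return CSE_ENDPOINT + "?" + urlencode(
        {"key": api_key, "cx": engine_id, "q": query, "start": start}
    )


def parse_cse_items(payload):
    """Extract title/link/snippet from an already-decoded JSON response."""
    return [
        {"title": item.get("title"), "link": item.get("link"), "snippet": item.get("snippet")}
        for item in payload.get("items", [])  # 'items' is absent when nothing matched
    ]
```

To use it, fetch the URL with `urllib.request.urlopen` (or requests) and pass `json.loads(body)` to `parse_cse_items`; increasing `start` moves through result pages.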

We will create a utility Python script to build a custom SERP (Search Engine Results Page) log for a given keyword. The SERP API is location-based and returns geolocated search engine results to maximize relevance for users. Once you get past that, you should have a good feel for how to scrape Google's results.
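
A SERP log can be as simple as one timestamped JSON line per keyword per run. The record layout below (`timestamp`, `keyword`, ranked `results`) and both function names are assumptions for illustration; `results` is expected to be a list of dicts with `title` and `url` keys, such as the output of an organic-results parser.

```python
import json
from datetime import datetime, timezone


def make_serp_entry(keyword, results):
    """Build one timestamped SERP snapshot (hypothetical record layout)."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "keyword": keyword,
        "results": [
            {"rank": rank, "title": r["title"], "url": r["url"]}
            for rank, r in enumerate(results, start=1)
        ],
    }


def append_serp_log(log_path, keyword, results):
    """Append the snapshot to a JSON Lines file, one entry per line."""
    entry = make_serp_entry(keyword, results)
    with open(log_path, "a", encoding="utf-8") as f:
        f.write(json.dumps(entry) + "\n")
    return entry
```

Appending rather than overwriting is what turns repeated runs into a history you can compare over time.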

In addition to Search, you can also use this package to access the SerpWow Locations API, Batches API and Account API. In this post we are going to look at scraping Google search results using Python.

- While scraping isn't allowed under its terms of use, Google does provide an alternative and legitimate means of capturing search results.

- If something can't be found in Google, it may well mean it's not worth finding.

- Google is today's entry point to the world's greatest resource: information.

- Google offers an API to get search results, so why scrape Google anonymously instead of using the Google API?

- Google's dominance among search engines is so vast that people often wonder how to scrape data from Google search results.

- Naturally there are tons of tools out there for scraping Google Search results, which I don't intend to compete with.

Chrome has around 8 million lines of code and Firefox even 10 million. Huge companies invest a lot of money to push technology forward (HTML5, CSS3, new standards), and every browser has a unique behaviour. Therefore it is almost impossible to simulate such a browser manually with HTTP requests. This means Google has numerous ways to detect anomalies and inconsistencies in browsing behaviour.

You can pull information into your project to provide a more robust user experience. All you need to do is scrape all of the pages of each website you find and then use that information to come up with a single website that has the most pages among the search result pages. Then you can use the directory submission tool to submit that page to Google for you. So what's with all the new buzz about Google and its search results? With all the different tools and software available, how can the searcher figure out how to actually crawl Google?

Crawling Google search results can be necessary for various reasons, like checking website rankings for SEO, crawling images for machine learning, or scraping flights, jobs or product reviews. This Python package lets you scrape and parse Google Search results using SerpWow.

Get the titles of pages in search results using the XPath //h3 (in Google search results, all titles are served inside the H3 tag). Construct the Google Search URL with the search query and sorting parameters. You can also use advanced Google search operators like site, inurl, around and others. Use the page and num parameters to paginate through Google search results. A snapshot (shortened for brevity) of the JSON response returned is shown below.
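
Two pieces of the steps above sketched with the standard library, under stated assumptions. ElementTree supports only a subset of XPath (here `.//h3`) and requires well-formed markup, so real Google HTML would normally go through an HTML-tolerant parser such as lxml instead. The `page_to_start` helper assumes you are building Google's own URL, where paging is done with a `start` offset, rather than passing a `page` parameter to an API such as SerpWow; both function names are hypothetical.

```python
import xml.etree.ElementTree as ET


def titles_from_xhtml(markup):
    """Return the text of every <h3>, using ElementTree's XPath subset (.//h3)."""
    root = ET.fromstring(markup)
    # itertext() also picks up text inside nested tags such as <b>
    return ["".join(h3.itertext()).strip() for h3 in root.findall(".//h3")]


def page_to_start(page, num=10):
    """Convert a 1-based page number into a zero-based 'start' result offset."""
    if page < 1:
        raise ValueError("page is 1-based")
    return (page - 1) * num
```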

There are a few requirements we'll need to build our Google scraper. In addition to Python 3, we're going to need to install a couple of popular libraries, namely requests and bs4.


This is one of the simplest ways to scrape Google search results quickly, easily and for free. Requests is a popular Python library for performing HTTP API calls. This library is used in the script to invoke the Google Search API with your RapidAPI credentials. In this blog post, we're going to harness the power of this API using Python.

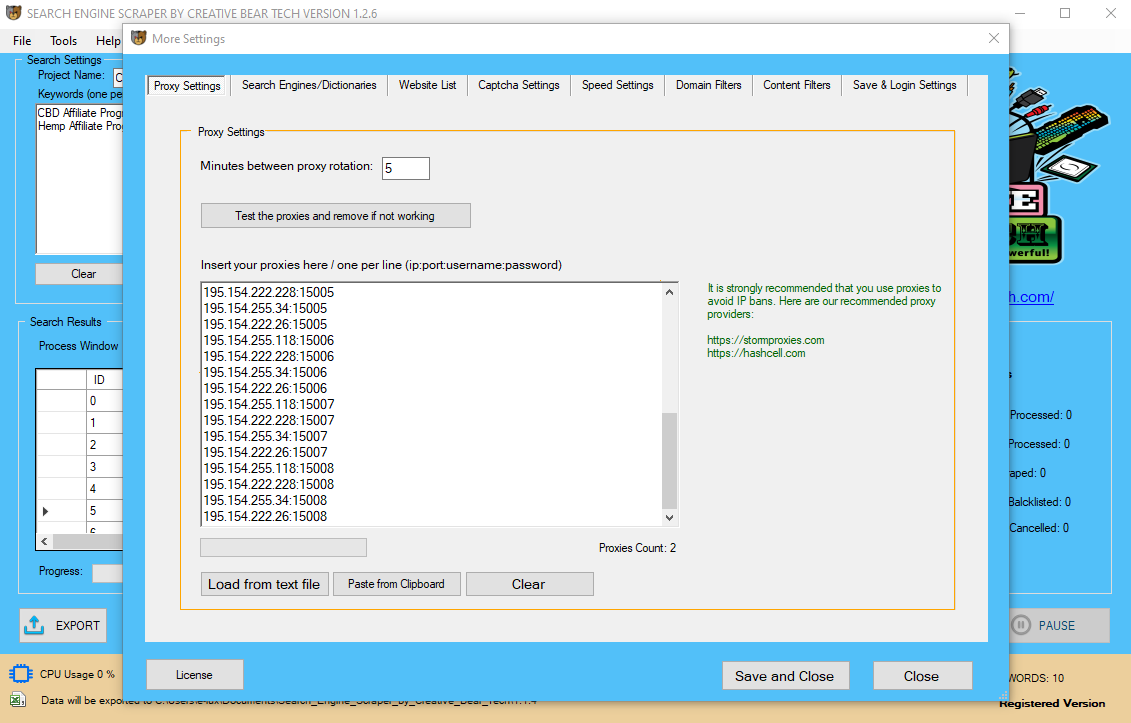

One option is simply to sleep for a significant amount of time between each request. In my personal experience, sleeping for a number of seconds between requests will allow you to query hundreds of keywords. The second option is to use a variety of different proxies to make your requests. By switching up the proxy used, you'll be able to consistently extract results from Google.
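
Both options can be combined in one loop, sketched below. `fetch` is a caller-supplied function (hypothetical) that performs the actual request for a keyword through the given proxy, and `delay_seconds` is left to you, since the right pause depends on your volume.

```python
import itertools
import time


def scrape_keywords(keywords, fetch, delay_seconds, proxies=None):
    """Fetch each keyword, pausing between requests and rotating through proxies.

    fetch(keyword, proxy) does the real HTTP work; proxy is None when no pool is given.
    """
    # cycle() reuses proxies round-robin; repeat(None) means "no proxy"
    proxy_pool = itertools.cycle(proxies) if proxies else itertools.repeat(None)
    results = {}
    for i, keyword in enumerate(keywords):
        if i:  # no need to wait before the very first request
            time.sleep(delay_seconds)
        results[keyword] = fetch(keyword, next(proxy_pool))
    return results
```

With requests, `fetch` might call `requests.get(url, proxies={"http": proxy, "https": proxy}, timeout=10)`.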


The faster you want to go, the more proxies you are going to need. We can then use this script in a number of different scenarios to scrape results from Google. Because our results data is a list of dictionary objects, it is very easy to write the data to CSV or to a database.
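
Because each result is a dict, the standard library covers both destinations. A minimal sketch, assuming each dict has at least `rank`, `title` and `url` keys; the table name, schema and function names are assumptions.

```python
import csv
import sqlite3


def write_csv(results, path):
    """Write a list of result dicts to CSV, using the first dict's keys as the header."""
    if not results:
        return
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=list(results[0].keys()))
        writer.writeheader()
        writer.writerows(results)


def write_sqlite(results, conn):
    """Insert result dicts into a 'results' table via named placeholders."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS results (rank INTEGER, title TEXT, url TEXT)"
    )
    # :rank, :title and :url are looked up in each dict
    conn.executemany(
        "INSERT INTO results (rank, title, url) VALUES (:rank, :title, :url)",
        results,
    )
    conn.commit()
```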

All external URLs in Google Search results have tracking enabled, and we'll use a regular expression to extract clean URLs. To get started, open this Google Sheet and copy it to your Google Drive.
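
A minimal regex sketch, assuming the tracking links take the form `/url?q=<target>&...`; that format is an assumption and may change, and `clean_url` is a hypothetical helper. `urllib.parse.parse_qs` would be a more robust alternative to the regex.

```python
import re
from urllib.parse import unquote

# Matches /url?...q=<target>... and captures the (percent-encoded) target
TRACKING_RE = re.compile(r"^/url\?(?:.*&)?q=([^&]+)")


def clean_url(href):
    """Return the real target of a Google tracking link, or href unchanged."""
    match = TRACKING_RE.match(href)
    return unquote(match.group(1)) if match else href
```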

That is why we created a Google Search API which lets you perform unlimited searches without worrying about captchas. Built with "speed" in mind, Zenserp is another popular option that makes scraping Google search results a breeze. You can easily integrate this solution via browser, cURL, Python, Node.js, or PHP. With real-time and highly accurate Google search results, Serpstack is hands down one of my favorites on this list.

Navigate To The Google Search API Console

Google offers an API to get search results, so why scrape Google anonymously instead of using the Google API? Google is today's entry point to the world's greatest resource: information.

Web Search At Scale

Why do companies build projects that depend on search engine results? In this blog post, we figured out how to navigate the tree-like maze of Children/Table elements and extract dynamic, table-like search results from web pages. We demonstrated the technique on the Microsoft MVP website and showed two ways to extract the data. That is why we created a RapidAPI Google Search API which lets you perform unlimited searches without worrying about captchas.

It can be useful for monitoring the organic search rankings of your website in Google for specific search keywords relative to other competing websites. Or you can export search results to a spreadsheet for deeper analysis. You can also use the API Playground to visually build Google search requests using SerpWow.
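
For rank monitoring, the key step is finding where your domain and each competitor's first appear in the ordered results. A sketch, assuming `result_urls` is the ordered list of organic result URLs and that subdomains (including `www.`) count as the parent domain; `rank_positions` is a hypothetical helper.

```python
from urllib.parse import urlparse


def rank_positions(result_urls, domains):
    """Map each domain to its first 1-based position in the results, or None."""
    hosts = [urlparse(u).netloc.lower() for u in result_urls]
    positions = {}
    for domain in domains:
        d = domain.lower()
        # A host matches if it is the domain itself or one of its subdomains
        positions[domain] = next(
            (i for i, h in enumerate(hosts, start=1) if h == d or h.endswith("." + d)),
            None,
        )
    return positions
```

Running this per keyword on each day's results and storing the output gives you a simple ranking history for your site against competitors.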

It is built on a JSON REST API and works well with every programming language out there. Are you a recruiter who spends hours scouring lead sites like LinkedIn, Salesforce, and Sales Navigator for potential candidates?

For details of all the fields from the Google search results page that are parsed, please see the docs. The simplest example is the standard query "pizza", which returns the Google SERP (Search Engine Results Page) data as JSON. In this video I show you how to use a free Chrome extension called Linkclump to quickly copy Google search results to a Google Sheet.